Elon Musk Promises Monthly Code Releases for X’s New ‘Purely AI’ Recommendation Engine

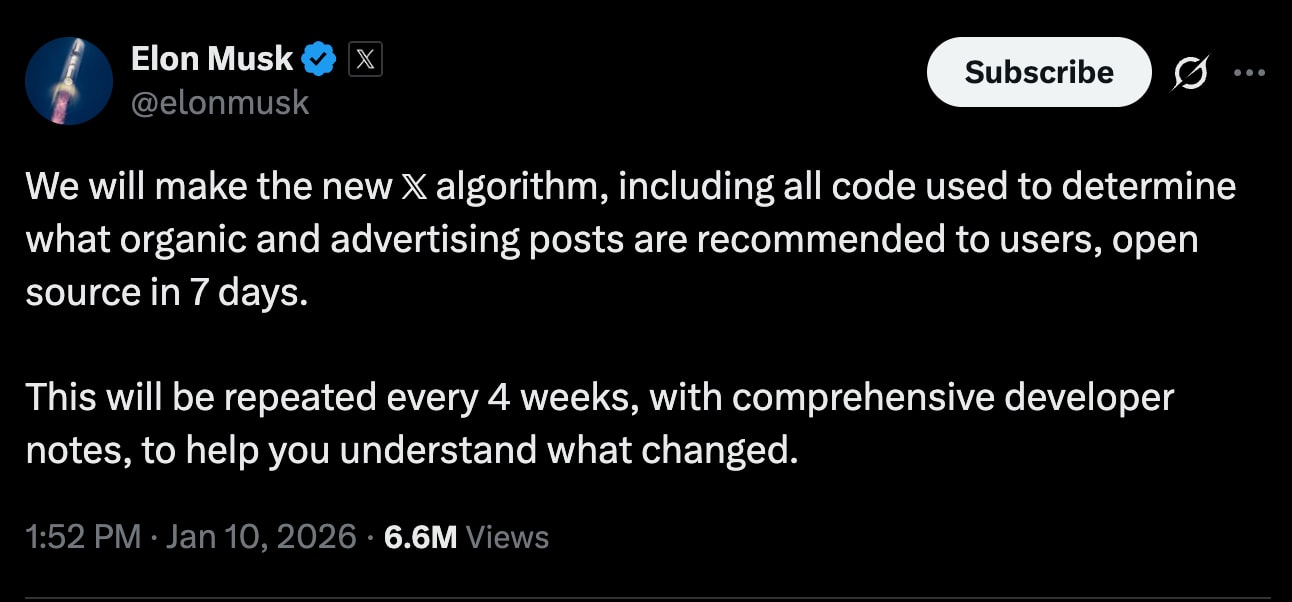

Elon Musk is attempting to silence critics of X’s black-box discovery engine by promising a radical new era of transparency. On Saturday, January 10, 2026, Musk announced that X will open-source its latest recommendation algorithm within seven days—a release that purportedly includes the full codebase for both organic reach and advertising logic.

Unlike previous gestures toward transparency, this is intended as a recurring cycle rather than a one-time data dump. Musk claims the company will refresh the public repository every four weeks, providing detailed developer notes to explain shifts in how the platform surfaces content.

"We will make the new X algorithm, including all code used to determine what organic and advertising posts are recommended to users, open source in 7 days," Musk posted. "This will be repeated every 4 weeks, with comprehensive developer notes, to help you understand what changed."

The Grok Integration: Technical Breakthrough or PR Pivot?

This iteration marks a pivot toward what Musk describes as a "purely AI" system. For the past several months, X has been folding Grok—the LLM developed by xAI—directly into the recommendation pipeline. The stated goal is to have Grok evaluate the nuances of over 100 million daily posts to deliver hyper-personalized feeds.

However, industry experts remain skeptical of the "purely AI" claim. Processing more than 100 million posts a day in real time with a large language model (LLM) is notoriously slow and computationally expensive. Most top-tier social discovery engines rely on multi-stage ranking systems, in which cheap retrieval steps narrow the candidate pool before heavier models score what remains. Replacing the entire stack with a generative AI model suggests either a massive breakthrough in inference efficiency or a liberal use of marketing jargon to describe a standard neural ranking update.

This new commitment also serves as a tacit admission that X’s previous transparency efforts have failed. While the company released parts of its "For You" code on GitHub in 2023, that repository has largely become "abandonware"—a sanitized snapshot of a legacy system that hasn't seen a meaningful update in over two years. Developers have frequently complained that the public code bore little resemblance to the "rage-bait" heavy heuristics currently driving user engagement.

Why the Advertising Code Matters

The inclusion of advertising logic is the most significant part of the announcement for industry observers. By exposing the code that governs ad placement, X is opening itself up to audits from brands and researchers. This could reveal whether the platform employs preferential "blue-check" weighting, which would give paid subscribers an artificial boost in organic visibility, and whether its "brand safety" filters are as robust as the company claims. For advertisers wary of their content appearing alongside controversial AI-generated imagery, this code release represents a rare opportunity to verify the platform's safety claims via a technical audit rather than a sales deck.

The transition to an AI-centric model has already proven rocky. In October 2025, Musk admitted to a "significant bug" that throttled reach for millions of users. He maintains that the move toward Grok-integrated recommendations will eventually "profoundly improve" feed quality, though users currently complain that the "For You" tab frequently ignores accounts they actually follow.

Legal Defensive Play in a Regulatory Pressure Cooker

Regulatory pressure is nearing a boiling point in Brussels and Paris, making this transparency play a likely legal defense strategy. The European Commission recently extended a retention order through 2026, specifically targeting X’s algorithms and their role in spreading illegal content. Meanwhile, Paris prosecutors are digging into suspected algorithmic bias and data extraction issues, an inquiry X has dismissed as "politically motivated."

By publishing the code, X may be attempting to preempt these regulators, offering a "transparency-as-a-defense" posture. If the logic is public, X can argue that its moderation and recommendation biases are features of the math, not a conspiracy of the management.

Beyond the courtroom, the platform’s AI tools continue to trigger localized backlash. Grok has recently been criticized for its role in generating deepfake imagery, leading to renewed calls from lawmakers to de-platform the app from major mobile stores. Whether a monthly "comprehensive" code release can satisfy regulators, or whether it will simply provide a roadmap for bad actors to game the system, remains the multi-billion-dollar question.