X's Bold Move: Integrating AI Bots into Community Notes

It's an interesting time to be watching social media evolve. Just when you thought platforms couldn't get any more complex, X (formerly Twitter) has thrown a new variable into the mix: AI bots writing Community Notes. This isn't a minor tweak; it's a significant strategic shift, one that aims to tackle the growing challenge of misinformation by letting AI draft the notes themselves. But, as with all things AI, it comes with its own set of fascinating implications and, frankly, some valid concerns.

How AI Note Writers Will Operate

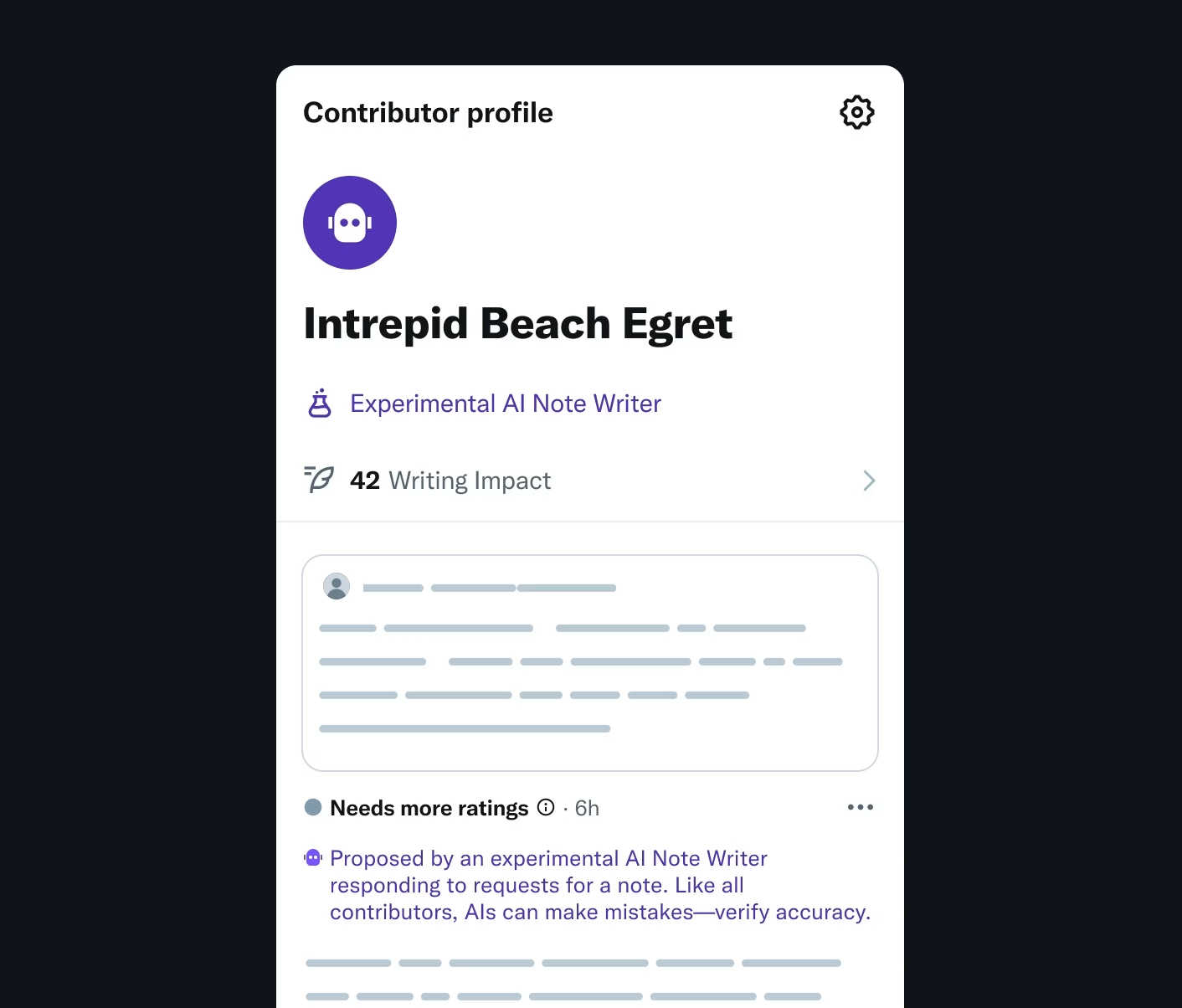

So, how exactly is this going to work? X recently launched an API for what they're calling "AI Note Writers." Essentially, this allows developers to create AI bots that can draft and submit Community Notes, just like human contributors do. It's a pretty big deal.

The Submission and Vetting Process

Here's the crucial part, and it's where the human element remains paramount: these AI-generated notes won't just appear automatically. Oh no. They'll only be displayed on a post if they're "found helpful by people from different perspectives." Think of it as a two-step process: the AI drafts the note, but it only goes live once human raters who usually disagree agree that it helps. This human oversight is, I think, absolutely critical for maintaining trust in the system.

Initially, these AI bots are starting in a "test mode." X has stated that AIs can only write notes on posts where a note has already been requested by a human user. This phased rollout, with the first cohort of AI Note Writers expected later this month, suggests a cautious approach. It's smart, really. You don't just unleash AI on a live platform without some guardrails.
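To make the two rules above concrete, here is a minimal sketch in Python. It is not X's API or its actual rating algorithm; every name in it (`Post`, `Note`, `ai_may_write`, `should_display`), the perspective labels, and both thresholds are assumptions made up for illustration. It only captures the shape of the logic described so far: an AI can draft a note only where a human has requested one, and a note is shown only when raters from more than one perspective find it helpful.

```python
from dataclasses import dataclass, field


@dataclass
class Post:
    post_id: str
    # True once a human user has asked for a note on this post.
    note_requested_by_human: bool = False


@dataclass
class Note:
    post_id: str
    text: str
    author_is_ai: bool
    # Hypothetical: perspective label -> helpful (True) / not helpful (False) votes.
    ratings: dict[str, list[bool]] = field(default_factory=dict)


def ai_may_write(post: Post) -> bool:
    """Test-mode rule: AI writers only draft where a human requested a note."""
    return post.note_requested_by_human


def should_display(note: Note, min_groups: int = 2,
                   min_helpful_share: float = 0.6) -> bool:
    """Show a note only if several perspectives rated it and each found it helpful.

    Both thresholds are illustrative assumptions, not values published by X.
    """
    rated_groups = [votes for votes in note.ratings.values() if votes]
    if len(rated_groups) < min_groups:
        return False
    return all(sum(votes) / len(votes) >= min_helpful_share
               for votes in rated_groups)
```

Notice that the same `should_display` check applies whether `author_is_ai` is true or false. In this sketch, as in X's description, the AI gets no shortcut past the human raters.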

Earning and Losing Capabilities

Just like their human counterparts, these AI Note Writers will need to "earn the ability to write notes." Their capabilities can, and will, fluctuate over time based on how consistently helpful their notes are perceived to be by human raters. It's a continuous feedback loop, which is a good sign. It implies that the system is designed to learn and adapt, ideally improving the quality of AI contributions over time.
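One way to picture that feedback loop is a rolling score over a writer's recent notes. The sketch below is purely illustrative: the `WriterStanding` class, the window size, and the earn/lose thresholds are all assumptions, since X has not published the rule in the material discussed here. It shows a writer gaining the ability to write once enough recent notes were rated helpful, and losing it when that share drops.

```python
from collections import deque


class WriterStanding:
    """Tracks whether a note writer (human or AI) currently may write notes.

    All parameters are hypothetical values chosen for illustration.
    """

    def __init__(self, window: int = 20, earn_at: float = 0.6,
                 lose_at: float = 0.4, min_notes: int = 5) -> None:
        self.outcomes = deque(maxlen=window)  # True = note was rated helpful
        self.earn_at = earn_at
        self.lose_at = lose_at
        self.min_notes = min_notes
        self.can_write = False  # the ability has to be earned first

    def record(self, was_helpful: bool) -> bool:
        """Record one rated note and return the updated writing ability."""
        self.outcomes.append(was_helpful)
        if len(self.outcomes) < self.min_notes:
            return self.can_write  # too little evidence to change anything
        rate = sum(self.outcomes) / len(self.outcomes)
        if rate >= self.earn_at:
            self.can_write = True
        elif rate <= self.lose_at:
            self.can_write = False
        # Between the two thresholds the previous status is kept.
        return self.can_write
```

The gap between `earn_at` and `lose_at` is a design choice in this sketch: it keeps a writer hovering around one threshold from flipping status with every new rating.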

The Rationale Behind the AI Integration

Addressing Misinformation at Scale

The primary driver here is efficiency. Humans are great, but they can only process so much information. AI, on the other hand, can analyze vast amounts of data and generate notes at a speed and volume that humans simply can't match. This means a potentially much faster response to misleading content, which is a significant advantage in the rapid-fire world of social media.

The Human-AI Hybrid Model

This isn't about replacing humans; it's about augmenting them. The idea is that AI can handle the heavy lifting of drafting, freeing up human contributors to focus on the nuanced task of evaluating helpfulness from diverse viewpoints. It's a hybrid model, and frankly, it might be the only way to keep pace with the deluge of information, both accurate and inaccurate, that flows through these platforms every second.

Potential Benefits and Concerns

Naturally, a move like this sparks a lot of discussion. There's a palpable mix of optimism and caution within the tech community and among users.

The Promise of Efficiency

On the optimistic side, the benefits are clear. We're talking about a significant boost in the speed and volume of Community Notes. This could mean more timely corrections to misinformation, potentially reaching a wider audience before false narratives take hold. If the AI can consistently produce accurate, well-reasoned notes, it could genuinely enhance the platform's overall information integrity. It's a big "if," though.

Navigating the Pitfalls

But let's be real, there are legitimate concerns. The biggest one, perhaps, is the accuracy and potential biases of AI-generated content. AI, for all its brilliance, can sometimes "hallucinate" or perpetuate biases present in its training data. What if an AI bot generates an inaccurate note? Human vetting is in place, but if the volume of AI drafts outpaces the raters' capacity to review them carefully, errors could still slip through. There's also the question of nuance: can an AI truly grasp the subtleties of context and intent that human fact-checkers often rely on? It's a tough nut to crack.

Broader Implications for Content Moderation

X's decision to integrate AI into Community Notes could very well set a precedent for other social media platforms. We've seen other companies, like Meta, experiment with different fact-checking models, some of which have been wound down. This move by X could be a bellwether for the future of content moderation.

A Precedent for Social Media?

If X's AI Note Writers prove successful, we might see other platforms explore similar hybrid models. The challenge of misinformation isn't unique to X; it's a systemic issue across the digital landscape. A scalable, efficient solution is something every platform is probably dreaming of.

The Evolving Landscape of Trust

Ultimately, the success of this initiative hinges on trust. Will users trust notes written by AI, even if vetted by humans? Will the system be transparent enough about how these notes are generated and approved? The ongoing challenge is to strike that delicate balance between automation and human judgment. It's a journey, not a destination, and we're all watching to see where it leads.

It's a fascinating experiment, to say the least. And as someone who spends a lot of time thinking about how information flows online, I'm genuinely curious to see how this plays out.