Tesla Restarts Dojo With Orbital Ambitions

Tesla CEO Elon Musk has signaled a revival of the Dojo supercomputer program, shifting the project’s focus from terrestrial Full Self-Driving (FSD) training to a decentralized orbital compute platform. The third-generation initiative, Dojo3, follows a brief hiatus in late 2025, when engineering effort was redirected toward integrating AI5 silicon into the vehicle fleet. With the AI5 architecture now stabilized, Tesla is reallocating resources to build out a high-volume, space-bound compute fabric.

The restart hinges on the success of the AI5 chip, which Musk claims matches Nvidia’s Hopper-class performance in standalone configurations and Blackwell-class output when clustered. By moving to a nine-month development cadence, Tesla intends to bypass third-party hardware entirely, aiming for a 100% proprietary stack for Dojo3.

The Engineering Reality of Orbital Compute

The transition from terrestrial data centers to low-Earth orbit (LEO) introduces physics-based hurdles that previous Dojo iterations never had to face. While the draft proposal highlights 24/7 solar exposure, thermal management in orbit is counterintuitive. A spacecraft in vacuum has no surrounding air or liquid to carry heat off by convection or conduction, so heat cannot simply be "blown away" by fans; it can leave only as thermal radiation.
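To give a sense of scale, the Stefan-Boltzmann law sets the ideal area a radiator needs in order to reject a given heat load. The sketch below is a back-of-the-envelope estimate, not a Dojo3 specification: the 1 MW heat load, 300 K radiator temperature, and 0.9 emissivity are illustrative assumptions, and the calculation ignores absorbed sunlight and infrared from Earth, both of which would push the real figure higher.

```python
SIGMA = 5.670374419e-8  # Stefan-Boltzmann constant, W / (m^2 K^4)


def radiator_area_m2(heat_w, temp_k, emissivity=0.9, sink_temp_k=0.0, sides=1):
    """Ideal radiator area needed to reject heat_w watts by radiation alone.

    All defaults are illustrative assumptions: a 0 K sink (deep space, no
    sunlight or Earth infrared) and a uniform radiator temperature.
    """
    if temp_k <= sink_temp_k:
        raise ValueError("radiator must be hotter than its surroundings")
    # Net radiated power per square metre of emitting surface.
    flux_w_per_m2 = emissivity * SIGMA * (temp_k**4 - sink_temp_k**4)
    return heat_w / (flux_w_per_m2 * sides)


# Hypothetical 1 MW compute module with a 300 K radiator.
area = radiator_area_m2(heat_w=1_000_000, temp_k=300.0)
print(f"{area:,.0f} m^2 of single-sided radiator")
```

Under these assumptions a single-sided radiator comes out at roughly 2,400 square metres per megawatt. Running the radiator hotter shrinks the area quickly, because emitted power scales with the fourth power of temperature, but hotter radiators also mean hotter silicon.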

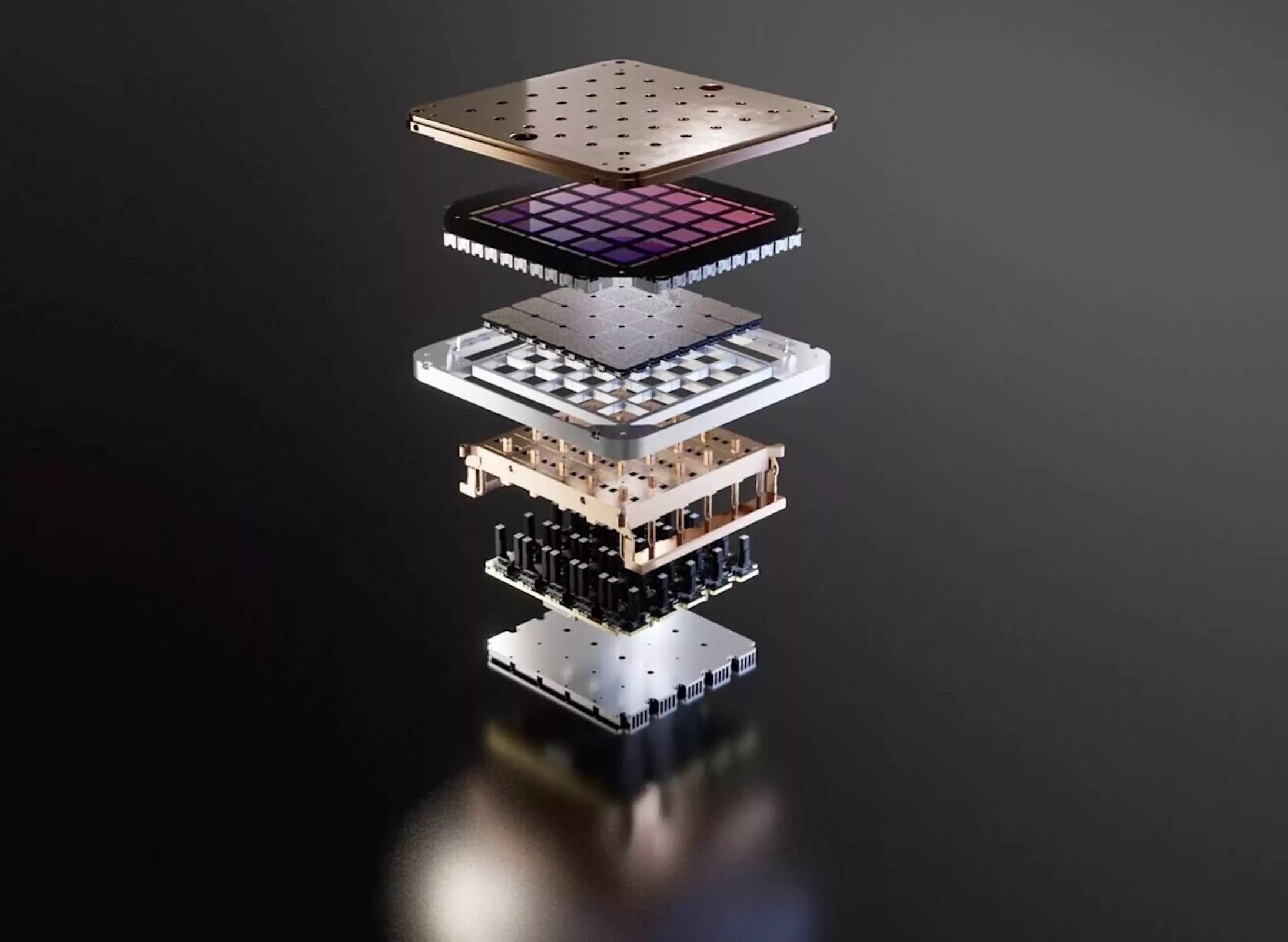

Dojo3 engineers must solve the problem of radiative heat dissipation, using massive radiator surfaces to shed the thermal energy generated by high-density silicon. Furthermore, operating 3nm or 2nm silicon in LEO exposes the hardware to high-energy cosmic rays, which calls for sophisticated radiation hardening to prevent Single Event Upsets (SEUs): bit-flips that can crash a training run or corrupt a weight matrix. "Space-grade" silicon often relies on larger feature sizes or redundant logic, potentially eating into the power-efficiency gains Musk has promised.
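One common form of redundant logic is triple modular redundancy (TMR), in which three copies of a value are kept and a majority vote masks a bit-flip in any one of them. The snippet below is a minimal software illustration of that voting step, under the assumption that only one copy is hit at a time; nothing in the reporting confirms which hardening technique Tesla would use.

```python
def majority_vote(a: int, b: int, c: int) -> int:
    """Bitwise majority of three redundant copies of a word.

    Each output bit takes the value held by at least two of the inputs,
    so a flip in any single copy is outvoted.
    """
    return (a & b) | (a & c) | (b & c)


stored = 0b1011_0010
copies = [stored, stored, stored]

# Simulate a single event upset flipping bit 5 in one copy.
copies[1] ^= 1 << 5

recovered = majority_vote(*copies)
print(recovered == stored)
```

The price is visible even in this toy version: three copies of every protected value, plus voting logic, which is the kind of overhead that cuts into performance per watt.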

Latency vs. Infrastructure Independence

The strategic logic behind moving AI training off-planet remains a point of contention among infrastructure analysts. Terrestrial data centers benefit from high-bandwidth fiber interconnects and sub-millisecond latency between racks. Even with a dense LEO constellation, an orbital Dojo network faces significant bandwidth and "round-trip" latency hurdles when ingesting the petabytes of video data generated by the Tesla fleet.
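A rough calculation helps separate the two constraints. The sketch below computes the minimum round-trip propagation delay to a satellite directly overhead and the time needed to uplink a dataset over a sustained link. The 550 km altitude, 1 PB dataset, and 100 Gbps link are illustrative assumptions, not reported figures, and the model ignores routing hops, protocol overhead, and gaps in ground-station coverage.

```python
C_KM_PER_S = 299_792.458  # speed of light in vacuum


def min_round_trip_ms(altitude_km: float) -> float:
    """Lower bound on ground-satellite-ground delay, satellite directly overhead."""
    return 2 * altitude_km / C_KM_PER_S * 1000


def transfer_days(data_petabytes: float, link_gbps: float) -> float:
    """Days needed to move a dataset over a link sustained at link_gbps."""
    bits = data_petabytes * 1e15 * 8           # decimal petabytes to bits
    seconds = bits / (link_gbps * 1e9)
    return seconds / 86_400


# Hypothetical figures for illustration only.
print(f"round trip: {min_round_trip_ms(550):.2f} ms")
print(f"uplink of 1 PB at 100 Gbps: {transfer_days(1, 100):.2f} days")
```

With these placeholder numbers the propagation delay is only a few milliseconds, while moving a single petabyte takes most of a day. For bulk training data, sustained uplink throughput is therefore likely to matter more than raw latency.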

The move appears to be a hedge against the rising costs and regulatory hurdles of terrestrial power consumption. By operating independently of the global power grid, Tesla avoids the "behemoth" infrastructure footprints currently being contested by environmental regulators and utilities. However, whether the efficiency of orbital solar can offset the massive capital expenditure of launching heavy compute clusters remains unproven.

In-House Silicon and the Samsung Partnership

Dojo3 marks the end of Tesla’s "hybrid" hardware era. While the Dojo2 clusters relied on a mix of Tesla’s D1 chips and Nvidia GPUs, the new roadmap is strictly vertically integrated.

- AI5: Currently in the deployment phase, providing the inference backbone for the Optimus robot and current vehicle fleet.
- AI6: In the late stages of development, with production rumored to be handled by Samsung’s Texas-based foundry under a multi-billion dollar agreement.
- AI7 / Dojo3: The first architecture designed specifically for the radiative cooling and power profiles of orbital deployment.

Tesla’s recruitment drive has pivoted to reflect this, with a focus on engineers experienced in high-volume chip manufacturing and thermal engineers who specialize in vacuum environments.

Market Implications and Launch Logistics

The success of Dojo3 would represent a fundamental shift in the AI hardware market, potentially reducing Tesla’s reliance on Nvidia at a time when chip demand remains at a record high. However, the main logistical barrier is the sheer mass that must reach orbit. To compete with terrestrial "gigafactories" of compute, Tesla will need to launch thousands of tons of silicon, radiators, and shielding.
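The arithmetic behind that barrier is simple, even though the inputs are uncertain. The sketch below turns a total mass into a launch count and a launch bill. Every number in it is a placeholder chosen for illustration (2,000 tons of hardware, 100 tons per launch, 500 dollars per kilogram); none comes from Tesla or SpaceX.

```python
import math


def launches_needed(total_mass_t: float, payload_per_launch_t: float) -> int:
    """Whole launches required to lift total_mass_t metric tons."""
    return math.ceil(total_mass_t / payload_per_launch_t)


def launch_cost_usd(total_mass_t: float, cost_per_kg_usd: float) -> float:
    """Launch cost only; excludes hardware, integration, and replacement."""
    return total_mass_t * 1000 * cost_per_kg_usd


# Placeholder inputs, not reported figures.
mass_t = 2_000
print(launches_needed(mass_t, payload_per_launch_t=100))
print(f"${launch_cost_usd(mass_t, cost_per_kg_usd=500):,.0f}")
```

Because the cost scales linearly with mass, any radiator or shielding mass added to solve the thermal and radiation problems feeds directly into the launch bill.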

Even with the declining launch costs provided by SpaceX’s Starship, the financial burden of replacing hardware that cannot be easily serviced once in orbit is a significant risk. If Tesla can solve the radiation-hardening and radiative-cooling puzzles, it may decouple AI scaling from the limits of the Earth’s power grid. If not, Dojo3 risks becoming an expensive experiment in an environment that is notoriously unforgiving to high-performance electronics.