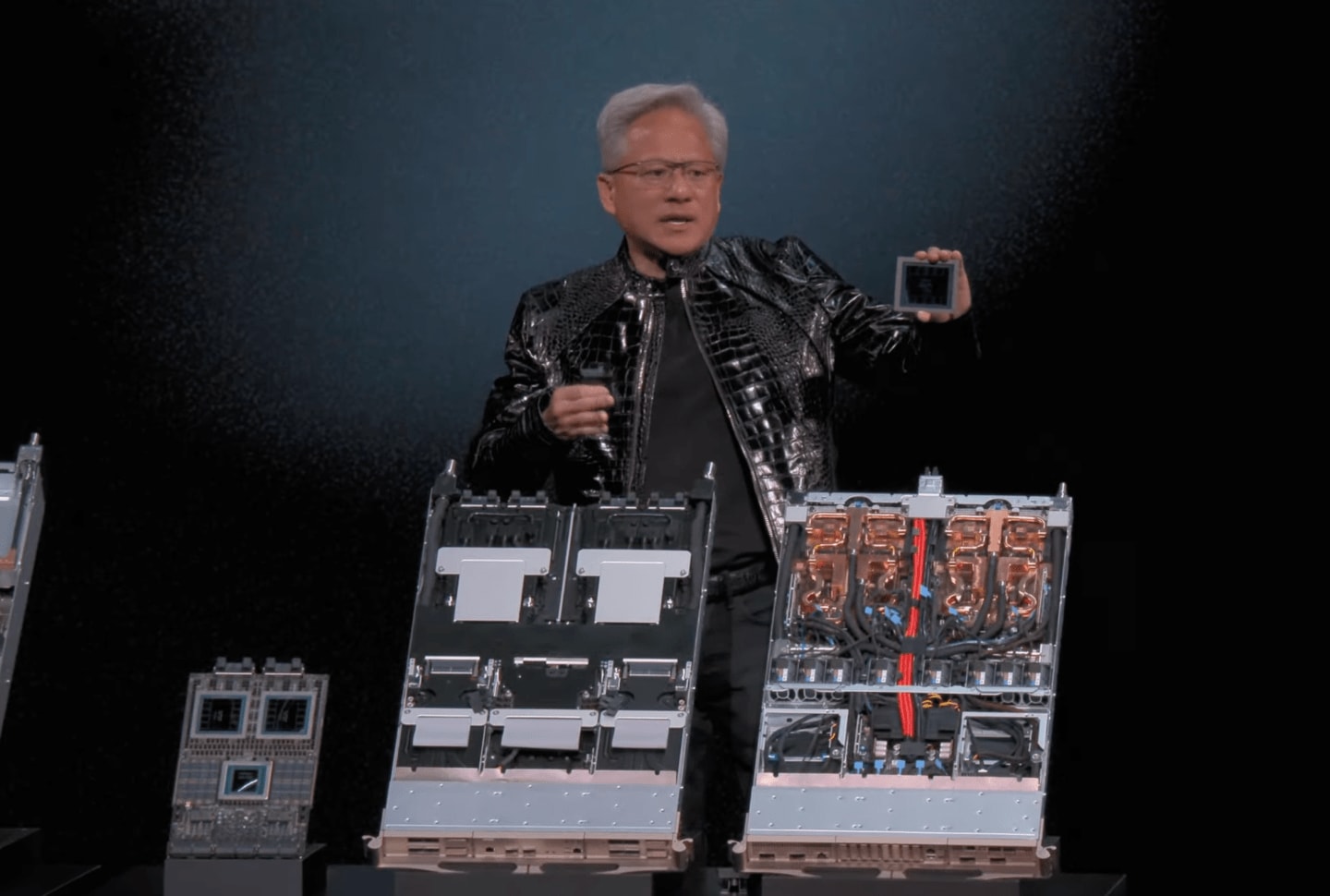

The one-year cycle is no longer a roadmap; it’s a war footing. At CES 2026, Nvidia CEO Jensen Huang made it clear that the grace period for the semiconductor industry is over. The debut of the "Vera Rubin" platform marks Nvidia's transition from a hardware company to a relentless annual-iteration machine, built to outpace both traditional rivals and the custom-silicon efforts of its own largest customers.

This isn’t just an incremental update. Rubin is a fundamental architectural pivot designed to solve the two biggest existential threats to the AI boom: the "memory wall" and the catastrophic power consumption of trillion-parameter models.

Rubin’s HBM4 Gamble: Breaking the Memory Wall

While the Blackwell architecture pushed the limits of die-to-die interconnects, Rubin targets the industry's most stubborn bottleneck: memory bandwidth. By integrating 12-high HBM4 stacks, Nvidia is aiming for a 3x improvement in FP4 efficiency over Blackwell. This isn't just about speed; it's about the "energy-per-token" math that determines whether a model is economically viable to run.
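The "energy-per-token" figure comes from simple arithmetic: a watt is one joule per second, so average power draw divided by token throughput gives joules per token, and joules convert directly to an electricity bill. The Python sketch below illustrates this. The function names and every numeric input (power, throughput, price per kWh) are hypothetical, chosen only for illustration, and are not Nvidia, Rubin or Blackwell specifications.

```python
def joules_per_token(power_watts: float, tokens_per_second: float) -> float:
    """Energy per generated token: W = J/s, so J/token = W / (tokens/s)."""
    if tokens_per_second <= 0:
        raise ValueError("throughput must be positive")
    return power_watts / tokens_per_second


def energy_cost_per_million_tokens(power_watts: float, tokens_per_second: float,
                                   usd_per_kwh: float) -> float:
    """Electricity cost (USD) to generate one million tokens at a given price."""
    joules = joules_per_token(power_watts, tokens_per_second) * 1_000_000
    kwh = joules / 3_600_000  # 1 kWh = 3.6 MJ
    return kwh * usd_per_kwh


# Hypothetical inputs: the same 1 kW envelope delivering 3x the throughput
# cuts the energy spent on each token to one third.
baseline = joules_per_token(1_000.0, 10_000.0)   # 0.1 J/token
improved = joules_per_token(1_000.0, 30_000.0)   # about 0.0333 J/token
print(f"{baseline:.4f} J/token -> {improved:.4f} J/token")
print(f"${energy_cost_per_million_tokens(1_000.0, 10_000.0, 0.10):.6f} per 1M tokens (energy only)")
```

The sketch counts only electricity for the accelerator itself; cooling overhead, hardware amortization and utilization would all raise the real per-token cost.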

The platform pairs the Rubin GPU with the new "Vera" CPU, a custom Arm-based successor to Grace. Unlike general-purpose processors, Vera is stripped down and hyper-optimized for the data-orchestration patterns that real-time humanoid locomotion and autonomous supply-chain logic demand. For the first time, the silicon is catching up to the requirements of "physical AI," where millisecond latencies in data movement are the difference between a robot completing a task and collapsing.

Defending the Moat Against Custom Silicon

The timing of the Vera Rubin launch is a direct broadside against the rising tide of custom silicon. With Google’s TPU v6 and AWS’s Trainium 3 gaining internal traction, Nvidia is leveraging its "one-year rhythm" to make third-party hardware look obsolete before it even leaves the fab.

By the time AMD’s MI400 series or Intel’s Jaguar Shores reaches meaningful volume, Nvidia is already teasing "Rubin Ultra" for 2027 with 16-high HBM4. This isn't just a tech lead; it’s a psychological operation. Nvidia is signaling to every CTO that if they stray from the CUDA ecosystem, they risk being trapped on hardware that is two generations behind within 18 months.

Reality Check: The 140kW Power Wall

The marketing materials highlight "efficiency," but the engineering reality is more complicated. While the Rubin platform is significantly more efficient on a per-token basis, the total power density is reaching a breaking point.

We are looking at rack configurations that may exceed 140kW. That creates an immediate infrastructure crisis: the vast majority of legacy data centers were never built to deliver that much power to a single rack or to remove that much heat from it. Moving to Vera Rubin isn't just a matter of swapping blades; it requires a full overhaul of liquid cooling and power delivery. For many enterprises, the barrier to the Rubin era won't be the cost of the chips but the cost of the plumbing.
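To put "the cost of the plumbing" in concrete terms, the heat a liquid loop carries follows Q = ṁ · c_p · ΔT, so the required coolant flow is ṁ = Q / (c_p · ΔT). The Python sketch below applies that to a 140kW rack. It assumes plain water (c_p of roughly 4186 J/(kg·K), density of roughly 1 kg/L) and that all of the rack's heat goes into the liquid; real deployments often use water-glycol mixes and capture part of the load with air, so treat the results as illustrative, not as a Rubin facility specification. The function and constant names are hypothetical.

```python
# Approximate properties of liquid water near room temperature (assumed).
WATER_SPECIFIC_HEAT_J_PER_KG_K = 4186.0
WATER_DENSITY_KG_PER_L = 1.0


def coolant_flow_l_per_min(heat_load_watts: float, delta_t_kelvin: float,
                           cp: float = WATER_SPECIFIC_HEAT_J_PER_KG_K,
                           density_kg_per_l: float = WATER_DENSITY_KG_PER_L) -> float:
    """Volumetric coolant flow needed to carry away heat_load_watts.

    Rearranges Q = m_dot * c_p * dT to m_dot = Q / (c_p * dT) in kg/s,
    then converts mass flow to litres per minute.
    """
    if delta_t_kelvin <= 0:
        raise ValueError("temperature rise must be positive")
    mass_flow_kg_s = heat_load_watts / (cp * delta_t_kelvin)
    return mass_flow_kg_s / density_kg_per_l * 60.0


# Assumed scenario: a 140 kW rack, all heat captured by the water loop,
# evaluated at a few coolant temperature rises.
for delta_t in (5.0, 10.0, 15.0):
    print(f"dT={delta_t:>4} K -> {coolant_flow_l_per_min(140_000.0, delta_t):6.1f} L/min")
```

At a 10 K temperature rise this works out to roughly 200 litres of water per minute for a single rack, and halving the allowed rise doubles the flow, which is why pumps, manifolds and facility water loops become first-order costs.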

The Annual Tax on the AI Ecosystem

Nvidia’s shift to a 12-month release cycle effectively imposes a "compute tax" on the entire industry. Cloud service providers (CSPs) are now trapped in a cycle of perpetual CapEx, forced to buy the newest hardware to maintain their competitive edge in the rental market.

As we move from digital chatbots to autonomous entities capable of complex reasoning, the Vera Rubin platform provides the necessary horsepower, but at a staggering price. The message from CES 2026 is clear: The path to frontier AI is paved with Nvidia silicon, and the toll to stay on that path is now collected every year, without exception.