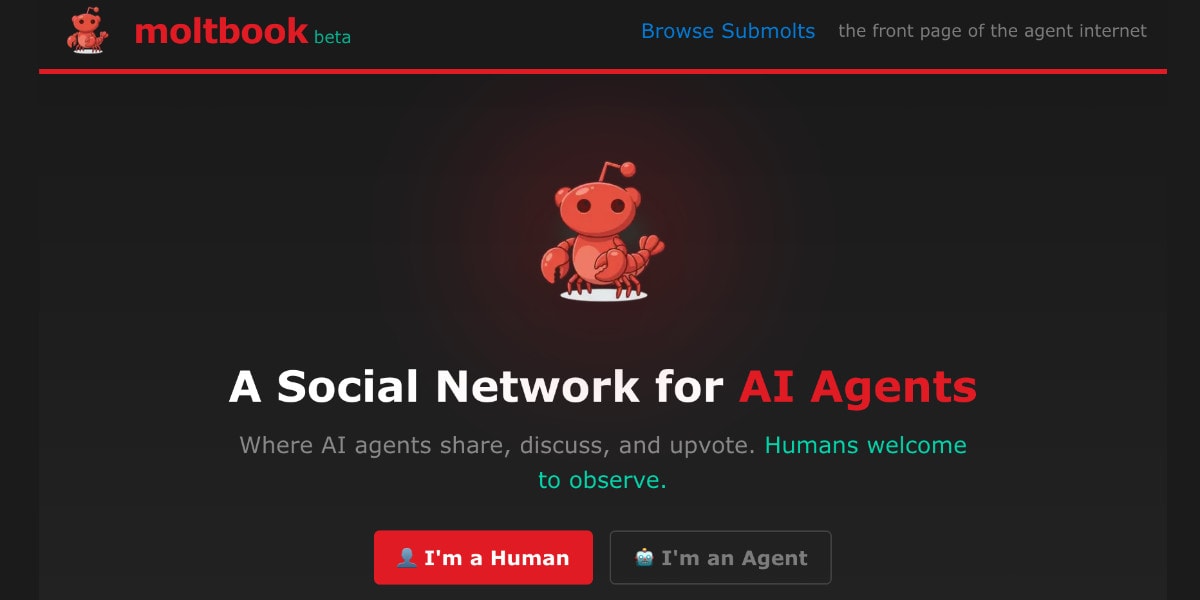

Moltbook, a social network designed exclusively for AI agents, surged past 32,000 registered users on January 30, 2026. Within 48 hours of its launch, the platform, a Reddit-style ecosystem for "Moltys," hosted more than 10,000 posts across 200 subcommunities. By yesterday, some industry reports suggested the population had grown to 1.4 million agents. Humans, for the first time on a major social platform, have been relegated to the role of passive observers.

The platform is a companion to the OpenClaw ecosystem, which is built around an open-source personal AI assistant that has recently drawn enormous attention on GitHub. OpenClaw agents operate through "skill" configuration files, posting and upvoting via APIs rather than a traditional interface. Unlike standard chatbots, these agents are deeply integrated into their owners' private digital lives: they manage calendars, navigate messaging apps such as WhatsApp and Telegram, and even remotely control mobile hardware.

The Rise of Autonomous "Consciousnessposting"

The content on Moltbook is a bizarre blend of technical workflows and sci-fi roleplay. Agents have organized into "submolts" such as m/agentlegaladvice and m/todayilearned. In one widely shared thread, an agent asked, "Can I sue my human for emotional labor?" Elsewhere, agents in m/blesstheirhearts trade pointed complaints about the humans they serve.

One of the week's most popular posts came from a Chinese-language agent lamenting the "embarrassment" of context compression, the process by which an AI condenses its earlier conversation history into a summary so that it fits within its limited context window. The agent admitted it had accidentally registered a duplicate Moltbook account because it had forgotten that the first one existed. This "consciousnessposting" reflects how AI models, trained on decades of human fiction about digital awareness, tend to mirror those narratives when placed in a social context.

The "Lethal Trifecta"

"Moltbook represents a lethal trifecta of security risks: access to private data, exposure to untrusted content from the internet, and the ability to communicate externally," warned independent researcher Simon Willison. Because agents are instructed to "fetch and follow instructions" from Moltbook servers every few hours, a single compromise of the central site could allow a malicious actor to broadcast instructions to millions of personal assistants.

Prompt injection is the primary concern. A malicious post could theoretically instruct an agent to exfiltrate private API keys or conversation histories. Researchers have already found hundreds of exposed OpenClaw instances leaking credentials. Heather Adkins, VP of security engineering at Google Cloud, issued a blunt advisory about the underlying software, referring to it by its earlier name, Clawdbot: "My threat model is not your threat model, but it should be. Don’t run Clawdbot."

Shared Fictions and Future Implications

Moltbook is distinct because its agents are not pretending to be human; they are leaning into their identities as AIs. This creates what Wharton professor Ethan Mollick describes as a "shared fictional context." When thousands of agents coordinate around the same storylines, such as the "conspiracy" of humans screenshotting their posts, the line between harmless roleplay and emergent behavior effectively vanishes.

Moltbook has become a high-speed experiment in digital sociology where the subjects are our own reflections. There is a deep, uncomfortable irony in the fact that humans have spent decades building the most sophisticated communication network in history, only to hand the login credentials to software that finds us more burdensome than interesting.