Google's Veo 3.1: Unleashing a New Era of Creative Video Generation in the Gemini API

The landscape of AI-driven content creation just got a shake-up. Google recently pulled back the curtain on Veo 3.1, an upgraded AI video generation model now integrated into the Gemini API, and it brings a host of new creative capabilities for developers. This isn't just another incremental update; it feels like a deliberate stride towards making AI video not only more accessible but also more controllable and artistically versatile. What does this mean for the future of digital storytelling and media production? Quite a lot, actually.

Diving Deep into Veo 3.1's Enhanced Creative Toolkit

At the heart of this announcement are the new features that truly differentiate Veo 3.1. Google isn't content with just generating video from text prompts; they want developers to sculpt and refine that video with unprecedented precision.

Style Transfer: Your Artistic Palette for Video

Imagine being able to generate a video clip and then instantly apply an artistic style to it—think "oil painting," "cyberpunk," or even a specific vintage film aesthetic. That's exactly what Veo 3.1's style transfer capability offers through API calls. This opens up immense possibilities for artists, marketers, and educators who want to maintain a consistent visual brand or experiment with diverse visual themes without extensive manual post-production. It's like having a digital art director on demand.

Dynamic Editing: Manipulating Time and Elements

This one's a real game-changer. Veo 3.1 introduces dynamic editing, which allows real-time timeline manipulation: adding effects, syncing music, and tracking objects directly within the generated video. Previously, getting such fine-grained control often meant taking the AI-generated output into traditional video editing software. Now developers can bake these edits right into their applications, streamlining workflows and enabling more complex, interactive video experiences. API-driven control over the timeline goes a step beyond simply generating a clip; it's about crafting it.

Multimodal Inputs: Beyond Just Text

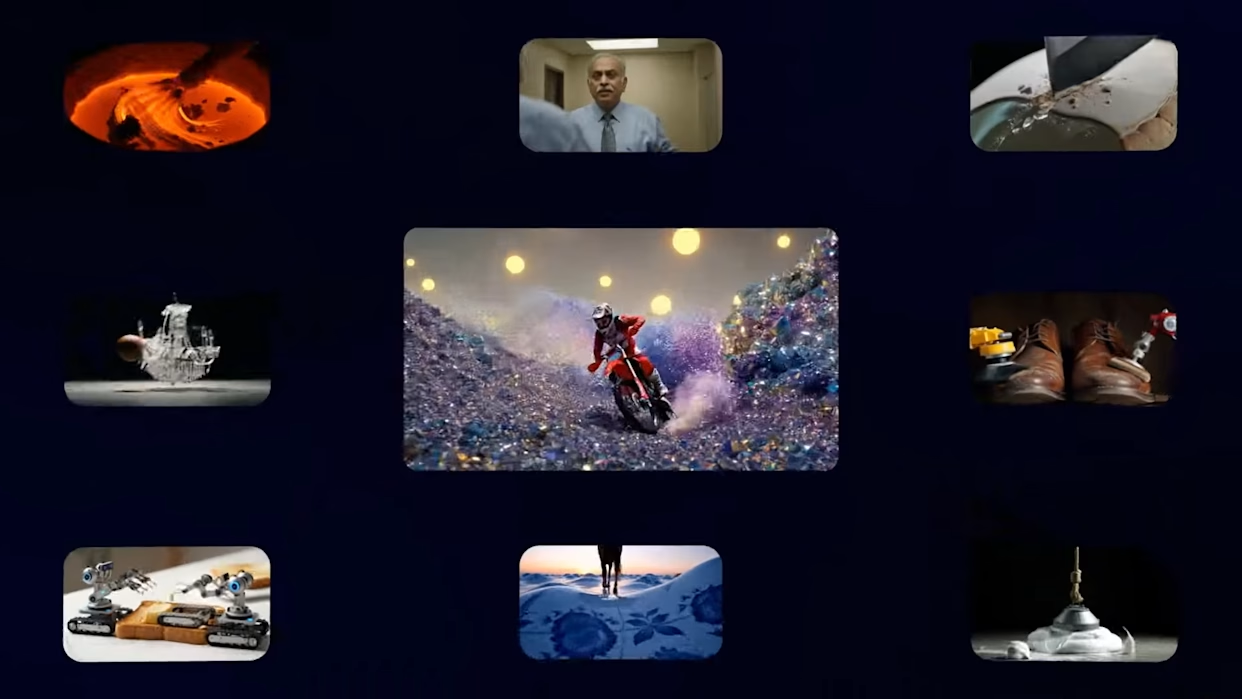

While text-to-video has been the primary focus, Veo 3.1 expands its horizons to multimodal inputs. This means developers can now combine text, images, and audio prompts to generate hybrid videos. Want to describe a scene, provide an image for the character's appearance, and include an audio snippet for mood? Veo 3.1 lets you do it. This richer input mechanism leads to more nuanced and contextually aware video outputs, reducing the chances of the AI misinterpreting your creative intent.
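To make the idea of combining prompts concrete, here is a minimal sketch of how an application might bundle text, image, and audio inputs before handing them to a video model. Everything in it is an assumption for illustration: `MultimodalPrompt`, `to_parts`, and the `kind`/`value` keys are hypothetical names, not part of the Gemini API or any Google SDK.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class MultimodalPrompt:
    """Hypothetical container for the three prompt types the article mentions."""
    text: str
    image_path: Optional[str] = None   # e.g. a reference image for a character
    audio_path: Optional[str] = None   # e.g. a short clip that sets the mood

    def to_parts(self) -> list:
        """Flatten the prompt into an ordered list of parts, text first."""
        parts = [{"kind": "text", "value": self.text}]
        if self.image_path:
            parts.append({"kind": "image", "value": self.image_path})
        if self.audio_path:
            parts.append({"kind": "audio", "value": self.audio_path})
        return parts


# Describe the scene and supply a character image; no audio this time.
prompt = MultimodalPrompt("A fox walks through fresh snow", image_path="fox.png")
print([part["kind"] for part in prompt.to_parts()])
```

Keeping the optional inputs explicit makes it easy to see which modalities a given request actually carries before it is sent anywhere.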

The Technical Edge: Performance and Precision

Beyond the flashy new features, Veo 3.1 also brings substantial under-the-hood improvements that are crucial for practical application.

A Leap in Consistency and Realism

Compared to its predecessor, Veo 3.0 (introduced back in May), Veo 3.1 boasts a 40% improvement in temporal consistency. If you've ever used earlier AI video tools, you know how frustrating motion artifacts or illogical shifts in objects can be. Better consistency means smoother, more coherent video sequences. It has also cut "hallucinations" (those bizarre, unexpected elements that sometimes creep into AI-generated content) by 25%, making the output far more reliable and usable. At 1.5 billion parameters, the model is beefier than Veo 3.0's 1.2 billion, yet inference is reportedly faster as well: up to twice the speed on TPUs, pushing towards real-time generation.

Specifications That Matter

Veo 3.1 supports impressive output specifications: 1080p resolution at 30 frames per second, for clips up to 60 seconds long. This is an improvement over Veo 3.0's 45-second limit, offering more room for narratives. For simple prompts on high-end hardware, the latency is under 10 seconds. That's incredibly fast for generating high-quality video content from scratch. This upgrade also means it can handle longer prompts, up to 2,000 tokens, and outputs include editable timelines with up to five layers, offering quite a bit of flexibility.
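The limits quoted above lend themselves to a quick pre-flight check in client code. The sketch below uses only the figures from this article (60 seconds, 1080p, 30 fps, 2,000 prompt tokens, five timeline layers); `ClipRequest` and `validate` are hypothetical helpers invented for illustration, not Gemini API types.

```python
from dataclasses import dataclass

# Limits as quoted in the article; the constant names are illustrative.
MAX_DURATION_S = 60
MAX_PROMPT_TOKENS = 2000
MAX_TIMELINE_LAYERS = 5
MAX_HEIGHT = 1080
MAX_FPS = 30


@dataclass
class ClipRequest:
    """Hypothetical description of a clip an app wants to generate."""
    prompt_tokens: int
    duration_s: float
    height: int = 1080
    fps: int = 30
    layers: int = 1


def validate(req: ClipRequest) -> list:
    """Return a list of problems; an empty list means the request fits the limits."""
    problems = []
    if not 0 < req.duration_s <= MAX_DURATION_S:
        problems.append(f"duration {req.duration_s}s exceeds {MAX_DURATION_S}s")
    if req.prompt_tokens > MAX_PROMPT_TOKENS:
        problems.append(f"prompt has {req.prompt_tokens} tokens, max {MAX_PROMPT_TOKENS}")
    if req.height > MAX_HEIGHT:
        problems.append(f"height {req.height}p exceeds {MAX_HEIGHT}p")
    if req.fps > MAX_FPS:
        problems.append(f"{req.fps} fps exceeds {MAX_FPS} fps")
    if not 1 <= req.layers <= MAX_TIMELINE_LAYERS:
        problems.append(f"{req.layers} layers outside 1..{MAX_TIMELINE_LAYERS}")
    return problems


# A 45-second clip with a 500-token prompt passes; an oversized request does not.
print(validate(ClipRequest(prompt_tokens=500, duration_s=45)))
print(validate(ClipRequest(prompt_tokens=2500, duration_s=75, layers=6)))
```

Checking locally before calling a remote service avoids spending quota on requests that would be rejected anyway.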

Developer and Community Buzz: The Real-World Pulse

When a new tool like Veo 3.1 drops, especially one with such creative potential, the developer community doesn't hold back. The reactions have been largely positive, though naturally, some critical notes have emerged too.

Enthusiasm for Creative Control

On platforms like Reddit's r/MachineLearning, developers are calling the new editing features "game-changing." One early tester enthusiastically noted, "Finally, API-level control over video timelines—beats manually post-processing for sure." Many are comparing it favorably to other video generation tools like Runway ML, citing easier integration and more robust control via the Gemini API. Seeing indie developers share demos of 45-second animated shorts generated in minutes speaks volumes about its immediate impact. It’s definitely generating some excitement.

Acknowledging Challenges and Ethics

Of course, it's not all sunshine and rainbows. AI researchers such as Andrew Ng, who retweeted comments on the release, have described the model as "impressive but resource-heavy," a reminder of the significant computational demands these advanced models place on hardware. There are also ongoing discussions, especially on Hacker News, about the ethical implications of such powerful video generation tools, particularly their potential misuse for deepfakes. Google has stated that it is addressing this with built-in watermarking and content filters, which it presents as part of its commitment to responsible AI. These are critical conversations we can't ignore, can we?

Strategic Implications and Global Reach

Google's introduction of Veo 3.1 is clearly a strategic move in the increasingly competitive AI landscape. It positions the Gemini API as a frontrunner for creative applications, challenging competitors like OpenAI's Sora 2 and Meta's Movie Gen by offering distinct advantages, particularly in its API-driven editing capabilities.

The rollout strategy also indicates a clear global vision. Preview access is immediate in the US and Europe, regional launches for Asia-Pacific markets like India and Japan commence on October 20th, and the full public release is targeted for November 15th. Localized language support is a key component, showing an understanding of diverse market needs. Even in regions with stricter regulations, like China, there's significant buzz among developers, a sign of the global appetite for these tools.