Google Gemini Now Remembers Your Chats, With a Catch

Google is letting Gemini remember details from your past chats, and that's a pretty big deal in the evolving landscape of conversational AI. Think about it: how many times have you had to repeat context to an AI? It's frustrating, right? Google is aiming to fix that.

The New Memory Feature: How It Works

Imagine you're planning a trip to Japan. You might ask Gemini about flight prices, then later about local customs, and then about specific train routes. In the past, each new chat was a fresh start. Now, Gemini should retain the context that you're planning a trip to Japan, and even remember your preferred travel dates or budget if you've mentioned them. This should make for a much smoother, more natural dialogue. It's like having a personal assistant who actually pays attention, rather than one with amnesia.

User Control and Privacy: A Critical Balance

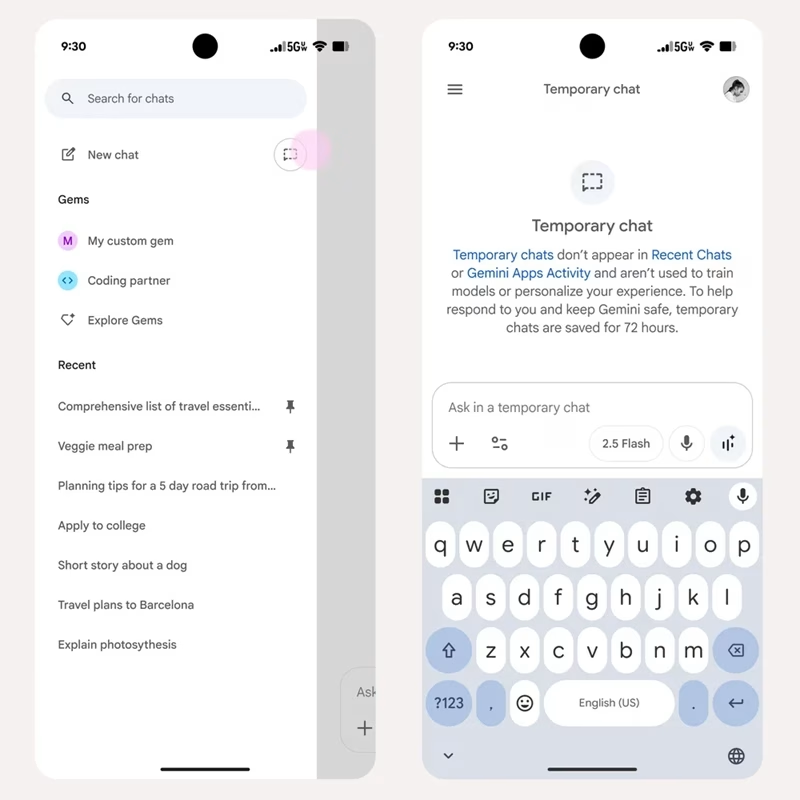

What if you have a sensitive query, something you absolutely don't want remembered? Google has thought of that too. It has introduced a "Temporary Chat" mode, which is a game-changer for privacy-conscious users. When you start a temporary chat, the conversation isn't saved to your chat history or used to personalize future responses. It's a one-off interaction that won't shape what Gemini remembers about you. This dual approach, persistent memory for personalization plus temporary chats for privacy, shows Google's attempt to balance utility with user autonomy. It's a smart play, really, given the increasing scrutiny on AI and data handling.

Why This Matters: Implications for AI Interaction

This update isn't just a minor tweak; it represents a significant step forward in the quest for more intelligent and intuitive AI assistants. The ability for an AI to maintain context across sessions is crucial for moving beyond simple Q&A and towards genuine collaboration. It makes the AI more proactive, more helpful, and frankly, less annoying to use. Who wants to re-explain their entire project every time they open the app? Not me, that’s for sure.

This also highlights the ongoing arms race in the AI space. Competitors like OpenAI's ChatGPT have also been rolling out memory features. Google's implementation with Gemini 2.5 Pro, coupled with the granular privacy controls, positions it strongly. It’s part of a broader industry trend towards "context-aware" AI, where the goal is to make AI interactions feel as natural and seamless as talking to another human. Or, at least, a very well-informed human.

Looking Ahead: What's Next for Gemini's Personalization

While the memory feature is currently limited to Gemini 2.5 Pro, it's reasonable to expect that, if successful, Google will eventually roll it out to other versions of Gemini. The feedback from early adopters will be critical here. Will users find the personalization genuinely useful, or will privacy concerns outweigh the benefits for some? Only time will tell.

The introduction of Temporary Chat mode is a clear acknowledgment of user demand for greater control over their data. It sets a precedent for how AI companies might navigate the tricky waters of personalization versus privacy in the future. As AI becomes more deeply integrated into our daily lives, features like these, which empower users to manage their digital footprint, will become increasingly important. It's a balancing act, and Google's latest move shows they're certainly trying to walk that tightrope. What do you think? Will you be letting Gemini remember your chats?