Google Gemini Now Lets You Sketch Your Prompts—and Spot Deepfake Videos

Describing a complex image to an AI has always been a tedious exercise in creative writing. Trying to explain exactly which "small, blue vase in the far-right corner" you want to edit usually results in three failed attempts and a frustrated "never mind." Google is finally addressing this friction.

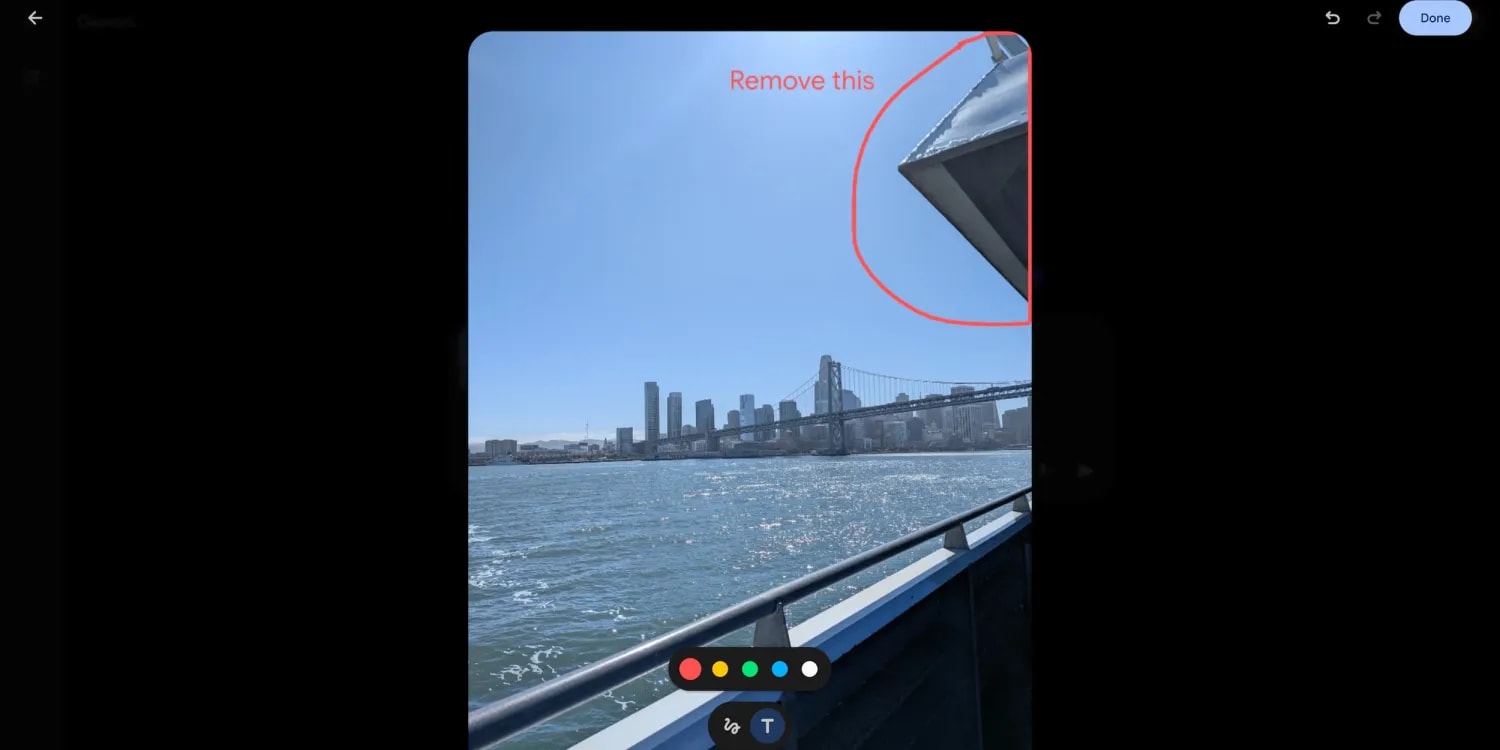

The "Nano Banana" Tool: Circle It, Don't Describe It

The headline feature is a new "Mark up" editor that appears whenever you upload an image to the Gemini prompt box. While the "Nano Banana" name sounds more like a Mario Kart power-up than a productivity tool, it represents Google’s attempt to humanize the interface. It brings a "Snapchat-style" ease to AI prompting.

- Sketch: Instead of typing a paragraph about a specific architectural detail in a photo, you can simply circle it with your finger and ask, "What style of window is this?"

- Text: You can overlay written instructions directly onto the canvas, anchoring your prompt to a specific visual point.

By allowing users to provide "visual anchors," Google is reducing the guesswork for its LLMs. This moves the interaction away from a standard chatbot and closer to a collaborative whiteboard.

The Competitive Edge: Gemini vs. Canvas and Image Wand

Google’s advantage lies in its mobile-first integration. While OpenAI’s Canvas feels like a desktop-heavy document editor, Nano Banana is designed for the thumb-scrolling reality of mobile users. It’s built for the person taking a photo of a broken bike part or a strange plant on a hike who needs an answer immediately, without the prompt-engineering headache.

Fighting Deepfakes with Video SynthID

As AI-generated media becomes indistinguishable from reality, the "can I trust my eyes?" question has moved from a theoretical concern to an everyday one. Google’s expansion of SynthID into video and audio is a direct response to the rise of sophisticated deepfakes.

Previously limited to static images, SynthID can now scan video files (up to 90 seconds) to detect the watermarks Google embeds in its own AI-generated content. The utility here is practical: a user can upload a viral clip of a public figure or a suspicious news snippet and ask, "Was this generated using Google AI?"

The tool provides a granular breakdown rather than a generic disclaimer. It can tell you if the visual track is authentic but the audio has been digitally altered, or pinpoint exactly which 10-second segment contains AI-generated elements. In a high-stakes election year or during breaking news cycles, this level of transparency is a vital, if defensive, tool for digital literacy.

What’s Next: Beyond the Prompt Box

This update is now live globally across Android, iOS, and the web, in every language Gemini supports. It also connects to NotebookLM, allowing users to pull these annotated images into their broader research projects.

But the real story is where this leads. By training users to interact with AI through sketches and annotations rather than just text, Google is laying the groundwork for the next generation of hardware. This "visual-first" prompting is a natural precursor to AR glasses, where pointing at a real-world object and "sketching" in the air will likely replace the smartphone screen entirely. For now, the "Nano Banana" is just the first step in making AI feel less like a search engine and more like a pair of eyes.