Apple's iOS 26 FaceTime Feature: A Deep Dive into Privacy, Protection, and Practicality

Every new iOS release brings a fresh wave of features, some subtle, others quite impactful. But the latest development in the iOS 26 beta, specifically concerning FaceTime, has certainly caught my attention, and, I imagine, that of many others. Apple has introduced a rather significant safety mechanism: FaceTime will now automatically pause video calls if it detects nudity or other sensitive content. It's a move that, on the surface, sounds like a straightforward win for privacy, but as with most things in tech, the devil's in the details, isn't it?

This isn't just a minor tweak; it's a proactive step into content moderation within private communication, a space that's traditionally been sacrosanct. And it raises some interesting questions about how we balance user autonomy with digital safety.

The Feature Unpacked: How FaceTime's New Guard Works

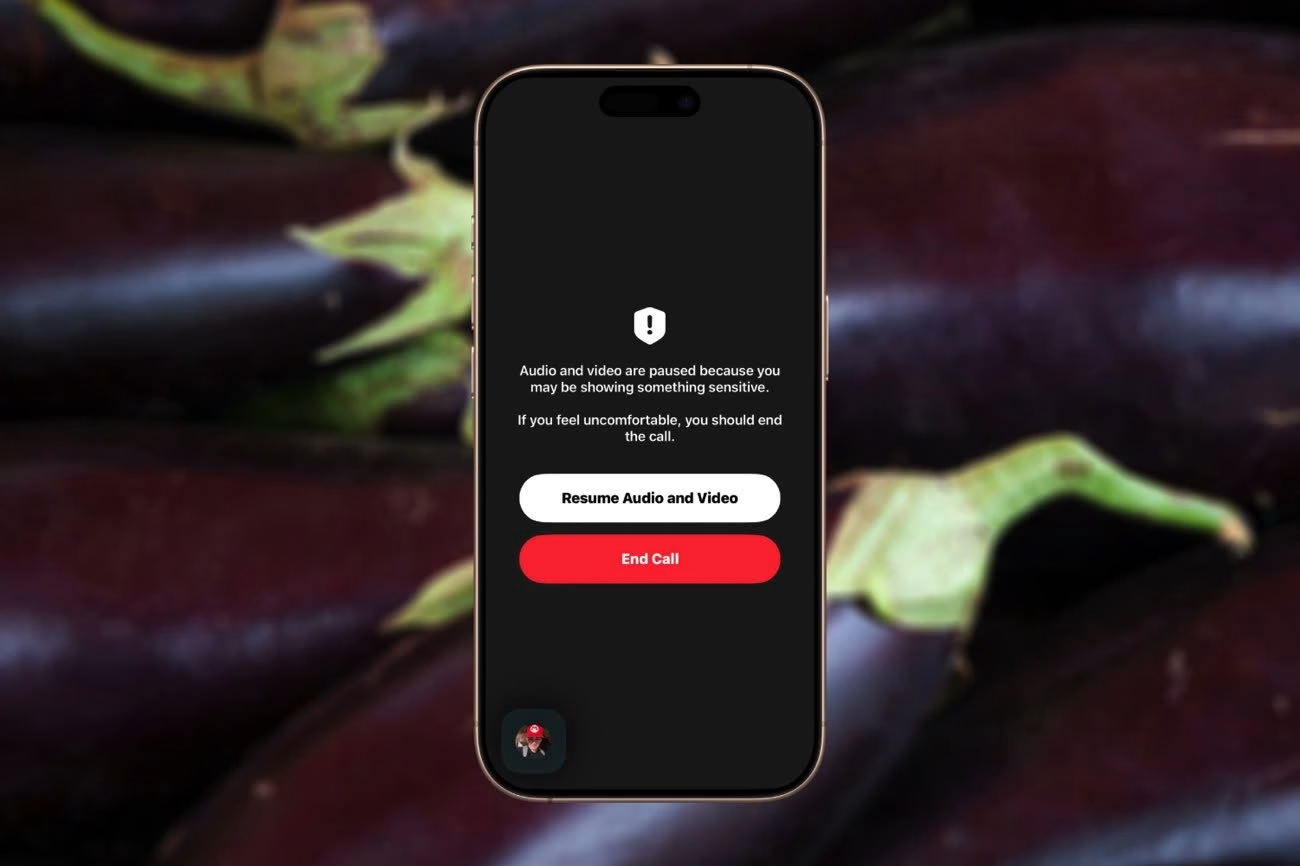

So, what exactly are we talking about here? In simple terms, the iOS 26 beta integrates a new on-device detection system for FaceTime calls. If this system identifies what it deems to be sensitive content, specifically nudity, it immediately pauses both the video and audio streams. Your screen, and the recipient's, will display a message along the lines of, "Audio and video are paused because you may be showing something sensitive."

Crucially, this isn't a permanent block. The call doesn't just end. Instead, it requires manual intervention to resume. You, the user, have to actively choose to unpause the call. This design choice is quite deliberate, I think, aiming to give control back to the user while still providing that initial protective barrier. It's a bit like having a digital bouncer at the door, but you still hold the key to let yourself back in. This feature, still in its beta phase, clearly signals Apple's ongoing commitment to user safety and privacy, especially in an era where digital interactions are increasingly central to our lives.
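To make the pause-and-resume behaviour described above concrete, here is a minimal sketch of it as a tiny state machine. This is not Apple's implementation: the `Call` class, its method names, and the `looks_sensitive` flag (a stand-in for whatever the on-device detector reports) are all hypothetical, invented purely for illustration.

```python
from dataclasses import dataclass
from enum import Enum, auto
from typing import Optional

# Wording taken from the message quoted in this article.
PAUSE_MESSAGE = (
    "Audio and video are paused because you may be showing something sensitive."
)


class CallState(Enum):
    ACTIVE = auto()
    PAUSED = auto()
    ENDED = auto()


@dataclass
class Call:
    """Hypothetical model of a call that pauses itself but never resumes itself."""

    state: CallState = CallState.ACTIVE
    audio_on: bool = True
    video_on: bool = True
    banner: Optional[str] = None

    def on_frame(self, looks_sensitive: bool) -> None:
        # looks_sensitive is an assumed boolean verdict from an on-device detector.
        if self.state is CallState.ACTIVE and looks_sensitive:
            self.state = CallState.PAUSED
            self.audio_on = False
            self.video_on = False
            self.banner = PAUSE_MESSAGE

    def resume(self) -> None:
        # Only an explicit user action brings audio and video back.
        if self.state is CallState.PAUSED:
            self.state = CallState.ACTIVE
            self.audio_on = True
            self.video_on = True
            self.banner = None

    def end(self) -> None:
        # The user can also choose to hang up instead of resuming.
        self.state = CallState.ENDED
        self.audio_on = False
        self.video_on = False
        self.banner = None
```

The point of the sketch is the asymmetry: a single flagged frame pauses the call, but a later clean frame does nothing. Only `resume()` or `end()`, both driven by the user, changes the state again.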

Navigating the Nuances of Privacy and Protection

On one hand, this feature is a clear win for privacy and safety, particularly in preventing non-consensual exposure. Think about the scenarios: accidental camera activation, a child inadvertently showing something they shouldn't, or even malicious actors attempting to share unwanted content. This new safeguard acts as an immediate circuit breaker.

It's a direct response to a growing concern about online harassment and the distribution of explicit imagery without consent. Apple has consistently positioned itself as a champion of user privacy, from App Tracking Transparency to end-to-end encryption. This FaceTime update feels like a natural, albeit more direct, extension of that philosophy. It's about protecting users from themselves, yes, but also from others. For many, this will be a welcome layer of security, offering peace of mind during video calls. It adds a safety net, especially for younger users or those who might be vulnerable.

User Experience and Potential Roadblocks

But, and there's always a "but," isn't there? While the intent is noble, the implementation raises some interesting questions about user experience and potential overreach. What constitutes "sensitive content" in the eyes of an algorithm? Will there be false positives? Imagine you're changing clothes quickly, or perhaps a medical professional is demonstrating something for a patient, or even an artist showing a nude drawing. Could these legitimate uses trigger the pause?
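The false-positive worry can be illustrated with a toy example. Apple has not published how its detector scores a frame, so everything below is assumed: the scores are made-up numbers, and the idea that the pause fires above a single confidence threshold is only one plausible design.

```python
# Hypothetical detector scores (0 to 1) paired with whether the frame
# really was sensitive. All values are invented for illustration.
SAMPLES = [
    (0.95, True),
    (0.80, True),
    (0.55, True),
    (0.70, False),  # e.g. an artist holding up a nude drawing
    (0.40, False),
    (0.10, False),
]


def count_errors(threshold):
    """Return (false_pauses, missed) for a given pause threshold."""
    false_pauses = sum(
        1 for score, sensitive in SAMPLES if score >= threshold and not sensitive
    )
    missed = sum(
        1 for score, sensitive in SAMPLES if score < threshold and sensitive
    )
    return false_pauses, missed


if __name__ == "__main__":
    for threshold in (0.5, 0.75, 0.9):
        false_pauses, missed = count_errors(threshold)
        print(f"threshold={threshold}: false pauses={false_pauses}, missed={missed}")
```

With these invented numbers, a low threshold pauses the innocent drawing, while a high one lets genuinely sensitive frames through. Wherever the line is drawn, some legitimate calls get interrupted or some unwanted content slips past.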

The requirement for manual resumption, while empowering, could also be disruptive. A quick, innocent moment could lead to an awkward pause and explanation. Some users might feel this is a step too far, an intrusion into their private conversations, even if the processing happens on-device. It's a delicate balance, trying to protect without patronizing. And, let's be honest, there are legitimate reasons for adults to share sensitive content consensually. How does this feature impact those scenarios? It's a conversation worth having, and the beta period is exactly for ironing out these kinks.

Broader Industry Implications and the Future of Digital Communication

This move by Apple isn't happening in a vacuum. It reflects a broader industry trend towards more stringent content moderation and user protection. As digital platforms become more pervasive, the responsibility of tech companies to ensure a safe environment grows. Apple, with its significant market share and influence, often sets precedents. It wouldn't surprise me if competitors, should the feature be well received (or simply come to be seen as necessary), begin to explore similar on-device content detection features for their own video communication platforms.

The feature's reception might also vary culturally. In some regions, where views on nudity are more conservative, this might be widely welcomed. In others, where there's a stronger emphasis on personal freedom and less on content restriction, it might face more scrutiny. It's a global product, after all, and navigating these diverse perspectives is a constant challenge for tech giants.

Ultimately, this isn't just about FaceTime; it's about the evolving social contract between users and the platforms they rely on for communication. We're seeing a shift from purely reactive content moderation to more proactive, on-device preventative measures. It's a complex, but necessary, evolution.

Concluding Thoughts

Apple's new FaceTime feature in iOS 26 is a significant step towards enhancing user safety and privacy in video communication. It addresses a real and pressing concern about non-consensual content and accidental exposure. While it brings undeniable benefits in terms of protection, it also sparks important conversations about algorithmic accuracy, user autonomy, and the potential for disruption in legitimate use cases.

As this feature moves from beta to full release, its real-world impact will become clearer. Will it be a seamless shield, or will it occasionally feel like an overzealous guardian? Only time, and user feedback, will tell. But one thing is for sure: the conversation around digital privacy and content moderation is only just beginning to heat up.