Anthropic to Train Claude on User Data, Sparking Debate on AI Development

Anthropic, known for its focus on AI safety and its "constitutional AI" approach, is reportedly looking to use real-world interactions to refine Claude's performance, conversational abilities, and factual accuracy. The strategy isn't entirely new in the AI space; many models are refined through ongoing fine-tuning on large datasets. However, Anthropic's specific methodology and the implications for user data are drawing considerable attention.

The Rationale Behind Data-Driven Training

At its core, the decision to train Claude on user data stems from a desire to create a more robust and helpful AI assistant. Large language models, while powerful, often benefit from exposure to diverse and dynamic conversational patterns that can't always be replicated in static training sets. By analyzing how users interact with Claude – the questions they ask, the feedback they provide, and the nuances of their language – Anthropic aims to:

- Improve conversational flow: Making Claude more natural and intuitive to converse with.

- Enhance factual accuracy: Correcting errors and biases by learning from real-world information exchange.

- Adapt to user needs: Better understanding and anticipating what users are looking for.

- Refine safety protocols: Identifying and mitigating potential harmful outputs through observed usage.

Navigating the Privacy Landscape

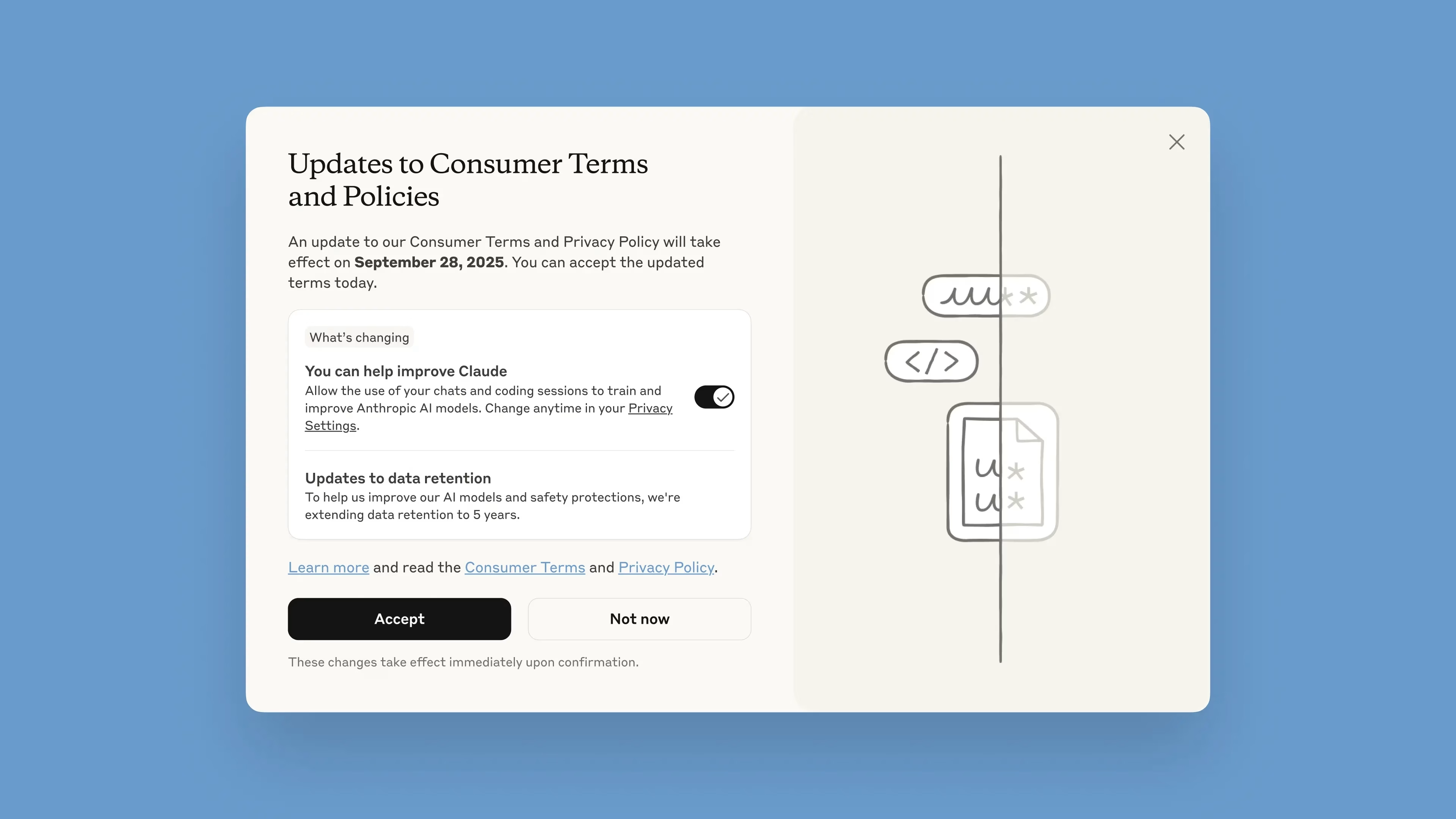

The announcement, however, inevitably brings data privacy concerns to the forefront. While Anthropic has historically emphasized safety and ethical considerations, the prospect of training an AI on user-generated content calls for a clear understanding of its data handling policies. What specific data will be used? Will it be anonymized? What controls will users have over their data? These are critical questions that need transparent answers.

"We are committed to responsible AI development," a spokesperson for Anthropic stated, "and our approach to data utilization is designed with user privacy and safety as paramount. We're implementing robust anonymization and aggregation techniques to ensure that individual user data is protected."

Industry Reactions and Future Implications

The AI community is watching this development closely. Some see it as a necessary step for creating truly advanced and adaptable AI systems. Others express caution, emphasizing the need for stringent ethical guidelines and user consent.

- Performance Enhancement: Experts believe this could lead to a significant leap in Claude's capabilities, making it more competitive with other leading AI models.

- Ethical Debates: The move reignites discussions around data ownership, algorithmic bias, and the potential for misuse of AI training data.

- Competitive Landscape: As AI models become increasingly sophisticated, the ability to effectively leverage user interaction data could become a major competitive advantage.

One might wonder if this data-driven approach could inadvertently lead to the AI adopting certain user biases. It's a valid concern. If a significant portion of user interactions contain prejudiced language or flawed reasoning, the AI could potentially learn and perpetuate these issues. This is where Anthropic's "constitutional AI" framework, which aims to imbue AI with ethical principles, will be put to the test. Can the constitution effectively guide the AI's learning from the messy, often imperfect, reality of human conversation?

What's Next for Claude?

Anthropic's decision to train Claude on user data is a bold move that reflects the dynamic nature of AI development. The success of this strategy will likely hinge on Anthropic's ability to balance performance gains with an unwavering commitment to user privacy and ethical AI principles. As Claude continues to evolve, its interactions with users will be more critical than ever, not just for its own improvement, but for shaping the broader conversation around responsible AI. It's a fascinating space to watch, and one that's moving at breakneck speed. We'll be keeping a close eye on how this unfolds.