The Abee AI Station: A Deep Dive into AMD's AI Powerhouse Mini PC

You know, it's always fascinating to see how quickly the tech landscape evolves, especially in the realm of compact computing. Just when you think mini PCs have hit their stride, something truly innovative pops up. And that's exactly what we're seeing with the Abee AI Station, a new liquid-cooled mini PC from a Chinese vendor that's making waves by packing AMD's most powerful AI processor into a surprisingly small form factor. It’s not just another small box; it’s a serious contender for local AI inference.

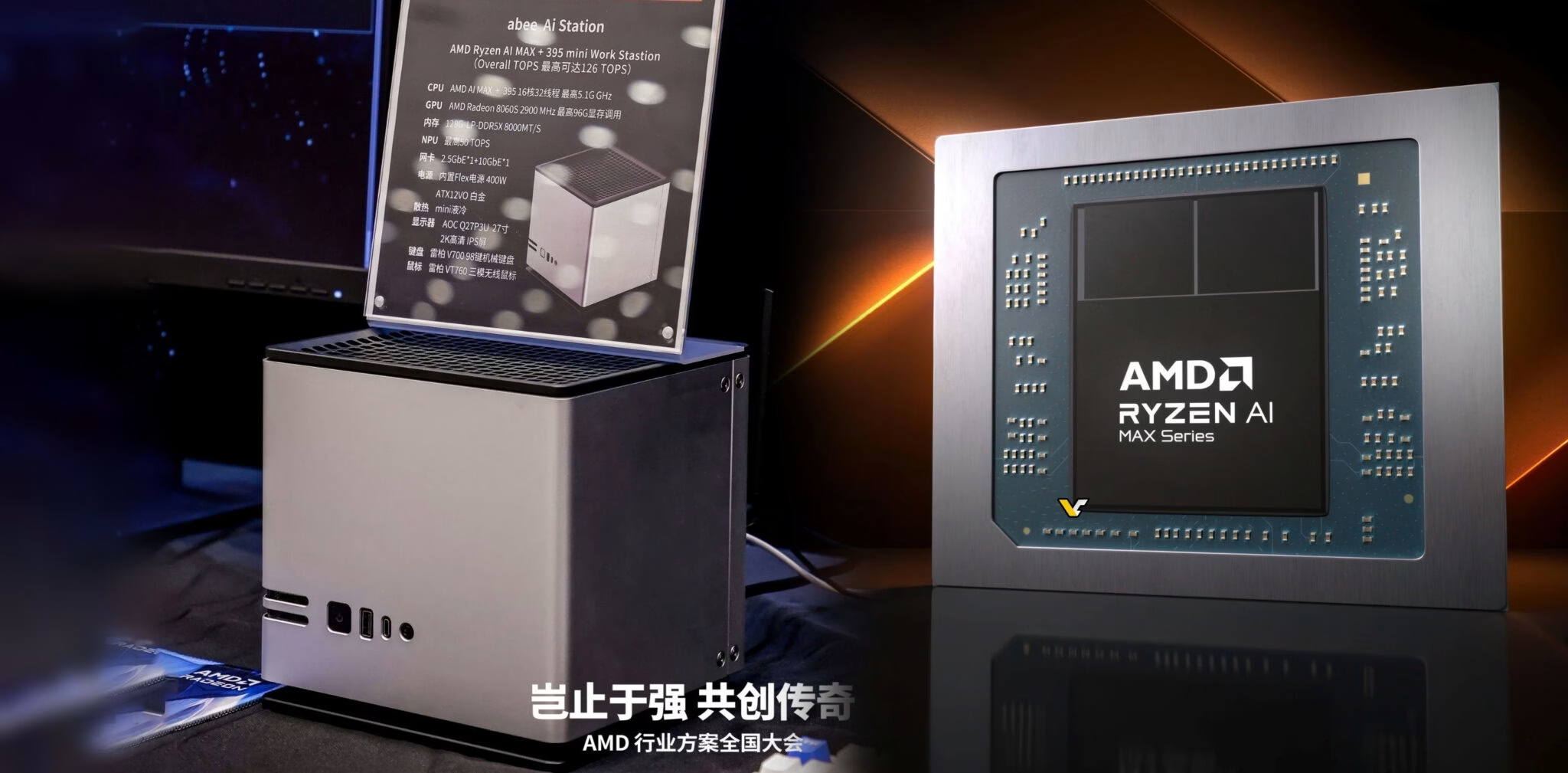

Unpacking the Core: AMD's Ryzen AI Max+ 395

At the heart of the Abee AI Station lies the AMD Ryzen AI Max+ 395 processor. This isn't just any CPU; it's a beast. We're talking about a 16-core, 32-thread APU that can boost up to 5.1GHz. For anyone familiar with processor architectures, that's a lot of computational muscle packed into a single chip.

But the real star of the show, especially for its target audience, is its integrated AI engine. This chip reportedly delivers a combined 126 TOPS (trillions of operations per second) of AI performance across its CPU, GPU, and NPU. And get this: over 50 TOPS of that comes from the dedicated Neural Processing Unit (NPU) alone. That's a significant figure for AI and machine learning inference tasks. It means the system can run complex AI models locally, reducing reliance on cloud services and potentially offering lower latency for real-time applications.
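To put those two numbers side by side, here is a quick back-of-the-envelope sketch. It only uses the figures quoted above (126 TOPS combined, "over 50" from the NPU), so the NPU share is a lower bound and the CPU-plus-GPU remainder is an upper bound. The function name `tops_split` is made up for this illustration.

```python
# Vendor-reported figures from the article, not measurements.
TOTAL_TOPS = 126
NPU_TOPS_MIN = 50  # "over 50 TOPS", so treat this as a lower bound


def tops_split(total: float, npu_min: float) -> dict:
    """Return the minimum NPU share and the maximum left over for CPU + GPU."""
    if not 0 < npu_min <= total:
        raise ValueError("npu_min must be positive and no larger than total")
    return {
        "npu_share_min": npu_min / total,      # fraction of the combined figure
        "cpu_gpu_tops_max": total - npu_min,   # whatever the NPU does not cover
    }


if __name__ == "__main__":
    split = tops_split(TOTAL_TOPS, NPU_TOPS_MIN)
    print(f"NPU share: at least {split['npu_share_min']:.0%}")
    print(f"CPU + GPU: at most {split['cpu_gpu_tops_max']} TOPS")
```

In other words, the NPU accounts for roughly two fifths of the headline number at minimum, with the rest attributed to the CPU cores and integrated GPU.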

Engineering for Sustained Performance: Liquid Cooling and Robust Power

One of the most striking features of the Abee AI Station, and what truly sets it apart from many mini PCs, is its integrated liquid cooling system. This isn't just for show. When you're pushing a high-performance APU like the Ryzen AI Max+ 395 with demanding AI workloads, thermal management becomes critical. Without proper cooling, the chip throttles, clock speeds drop, and your "powerful" system becomes, well, less powerful.

The Abee AI Station addresses this head-on with dual 92mm fans, a small internal radiator, and a pump mounted directly on the APU. This setup is designed to sustain high performance without thermal throttling, a common Achilles' heel for compact systems under heavy load. And let's not overlook the power supply. Despite its compact 21 x 22 x 15 cm chassis, this mini PC incorporates a built-in 400W Flex ATX PSU, which is platinum-rated for efficiency. That's a serious amount of clean, stable power for such a small machine, and it leaves headroom above what the APU itself is likely to draw. It also features a custom ATX12VO motherboard, a detail that speaks to its bespoke engineering.

Memory, Storage, and Connectivity: Built for AI Workloads

Beyond the processor and cooling, the Abee AI Station doesn't skimp on other crucial components. It comes equipped with a generous 128GB of LPDDR5X memory running at 8000 MT/s. That's a lot of fast RAM, essential for feeding data to those hungry AI models. However, it's worth noting that the RAM is soldered and non-upgradeable. For some, that's a potential drawback, but 128GB is a substantial amount for most current AI inference tasks.
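How far does 128GB actually go? A rough sketch helps. The snippet below estimates the storage needed for a model's weights at a given precision, and the theoretical peak memory bandwidth from the transfer rate. Two caveats: the 256-bit bus width is an assumption (the article only gives the 8000 MT/s figure), and the weight estimate ignores KV cache, activations, and whatever the operating system reserves. The function names and the 70-billion-parameter example are purely illustrative.

```python
RAM_GB = 128                   # from the article
TRANSFER_RATE_MTS = 8000       # from the article
ASSUMED_BUS_WIDTH_BITS = 256   # ASSUMPTION: not stated in the article


def weights_gb(params_billion: float, bits_per_weight: int) -> float:
    """Approximate weight storage in GB (10^9 bytes), weights only."""
    return params_billion * bits_per_weight / 8


def peak_bandwidth_gbs(mega_transfers_per_s: float, bus_width_bits: int) -> float:
    """Theoretical peak in GB/s: transfers per second times bytes per transfer."""
    return mega_transfers_per_s * 1e6 * (bus_width_bits / 8) / 1e9


if __name__ == "__main__":
    for bits in (16, 8, 4):
        need = weights_gb(70, bits)  # hypothetical 70B-parameter model
        verdict = "fits" if need < RAM_GB else "does not fit"
        print(f"70B @ {bits}-bit: ~{need:.0f} GB of weights, {verdict} in {RAM_GB} GB")
    bw = peak_bandwidth_gbs(TRANSFER_RATE_MTS, ASSUMED_BUS_WIDTH_BITS)
    print(f"Theoretical peak bandwidth: ~{bw:.0f} GB/s (assumed bus width)")
```

The takeaway is that quantization matters more than raw capacity: the same hypothetical model that overflows 128GB at 16-bit precision leaves plenty of room at 4-bit. Real-world bandwidth will also land below the theoretical peak.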

For storage, the system is listed with dual 56GB modules (an interesting capacity choice, perhaps for specific boot or cache configurations) and a 1TB DOGE unit. Yes, you read that right, a "DOGE unit." While the naming is certainly unique, the 1TB capacity provides ample space for datasets and models. Connectivity is also robust, with two network ports covering both 2.5GbE and 10GbE. This high-speed networking is vital for transferring large datasets, a common requirement in AI development. The package even includes support for a 27-inch AOC Q27P3U display, a webcam, and a wireless mechanical keyboard and mouse, making it a complete workstation solution out of the box.
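To see why the 10GbE port matters for moving datasets around, here is a minimal sketch that compares ideal transfer times over the two link speeds. The 100GB dataset size is a made-up example, and the `efficiency` parameter is an assumption that stands in for protocol overhead and disk limits; at its default of 1.0 the result is a best-case line-rate figure.

```python
def transfer_seconds(size_gb: float, link_gbps: float, efficiency: float = 1.0) -> float:
    """Ideal time in seconds to move size_gb (10^9 bytes) over a link_gbps link.

    efficiency is the fraction of line rate actually achieved (ASSUMPTION:
    1.0 means a perfect link with no protocol or storage overhead).
    """
    if size_gb < 0 or link_gbps <= 0 or not 0 < efficiency <= 1:
        raise ValueError("size must be >= 0, link speed > 0, efficiency in (0, 1]")
    bits = size_gb * 1e9 * 8
    return bits / (link_gbps * 1e9 * efficiency)


if __name__ == "__main__":
    dataset_gb = 100  # hypothetical dataset size
    for name, speed in (("2.5GbE", 2.5), ("10GbE", 10.0)):
        minutes = transfer_seconds(dataset_gb, speed) / 60
        print(f"{name}: ~{minutes:.1f} min for {dataset_gb} GB at line rate")
```

At line rate the faster port cuts the transfer time to a quarter, which adds up quickly when you are shuttling model checkpoints or datasets between machines. Actual throughput depends on the storage and the other end of the link.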

The Broader Picture: Mini PCs and the AI Revolution

The Abee AI Station isn't an isolated case; it's part of a growing trend. We've seen other Chinese brands, like GMKTec with their EVO-X2 and SDUNITED with the AX835-025FF, also begin to adopt AMD's Strix Halo silicon (the codename for the Ryzen AI Max family) in their mini PCs. This indicates a clear strategic direction for these manufacturers: targeting the burgeoning demand for local AI compute power.

What's particularly interesting is the contrast with major manufacturers such as Asus, Framework, and HP. So far, they've largely limited their use of the Strix Halo chip to larger workstation-class systems, rather than compact desktops. This makes the Abee AI Station and its brethren pioneers in bringing this level of AI performance to the mini PC segment. Whether these mainstream brands will eventually follow suit and bring Strix Halo to their mini PC lines remains an open question, but for now, it's the smaller, more agile vendors who are pushing the boundaries. This level of inference performance truly puts the Abee AI Station in direct contention for the title of best mini PC, especially for AI developers looking for powerful local compute options without cloud reliance.

Concluding Thoughts: A Promising Future for Compact AI

On paper, the Abee AI Station appears to have all the necessary hardware to maintain stable, high performance under sustained AI workloads. Its combination of AMD's most powerful AI processor, sophisticated liquid cooling, and a robust internal power supply is genuinely impressive for its size. Of course, real-world testing will be the ultimate arbiter of its capabilities, but the specifications alone paint a very promising picture.

This mini PC represents a significant step forward for compact AI workstations. It demonstrates that powerful, dedicated AI inference can indeed be achieved in a desktop footprint that's barely larger than a stack of books. For developers, researchers, or even enthusiasts looking to run complex AI models locally, the Abee AI Station could be a game-changer. It's an exciting time for mini PCs, and Abee is certainly making a bold statement.